Gone are the days when you could drop-ship your way to success: customer experience and customer lifetime value have become more immediate priorities. It’s still challenging to prove the value of that investment as opposed to customer acquisition; however, the game is clearly changing, prompting ecommerce brands to cherish their most valuable asset: customer loyalty.

User privacy and app tracking have been top-of-mind topics for the ecommerce advertising industry for the past several years. With online shoppers increasingly concerned about protecting their personal data, and 56% of consumers wanting more control over it, big tech companies were determined to act.

These concerns reached a critical point when Apple announced the release of iOS 14 and its privacy features, changing the way apps collect user data. With over 1.8 billion active iPhones worldwide (in 2023) and expected low opt-in rates, the update was bound to considerably impact the $327.1 billion mobile advertising industry.

Ecommerce marketing needed to evolve, prioritizing retention and customer loyalty more than ever.

To better understand what it was all about, let’s take a closer look at the privacy updates in iOS 14, how they impacted the industry, and reflect on ways to face the changes still to come, given the ever-evolving landscape of privacy.

What do privacy updates mean to the industry?

Announced during the annual Worldwide Developers Conference in June 2020 but rolled out starting with iOS 14.5 in April 2021, the heavily marketed update was supposed to give Apple consumers more control over their data. And while it had consumer privacy at heart, there was increasing concern about the impact on ecommerce, given that less data would inevitably be available for ad targeting.

Mobile apps use Apple’s IDFA (Identifier for Advertisers) to track behavior beyond the app in use. Starting with iOS 14.5, that tracking became contingent on the user’s explicit consent.

The changes inevitably affected platforms like Facebook, which collect data for targeting and advertising purposes, because end users are likely to decline when shown a tracking prompt. Since Facebook is the #1 platform for brands to market their products online and generate sales, a devastating impact was expected, especially for small businesses. Specifically, a cut of over 60% in their sales for every dollar spent was predicted.

What’s more, Google announced it would stop supporting third-party cookies in Chrome by the end of 2023, then postponed the move to 2024. With the end of third-party cookies around the corner, online marketing was about to face some of its biggest changes in years.

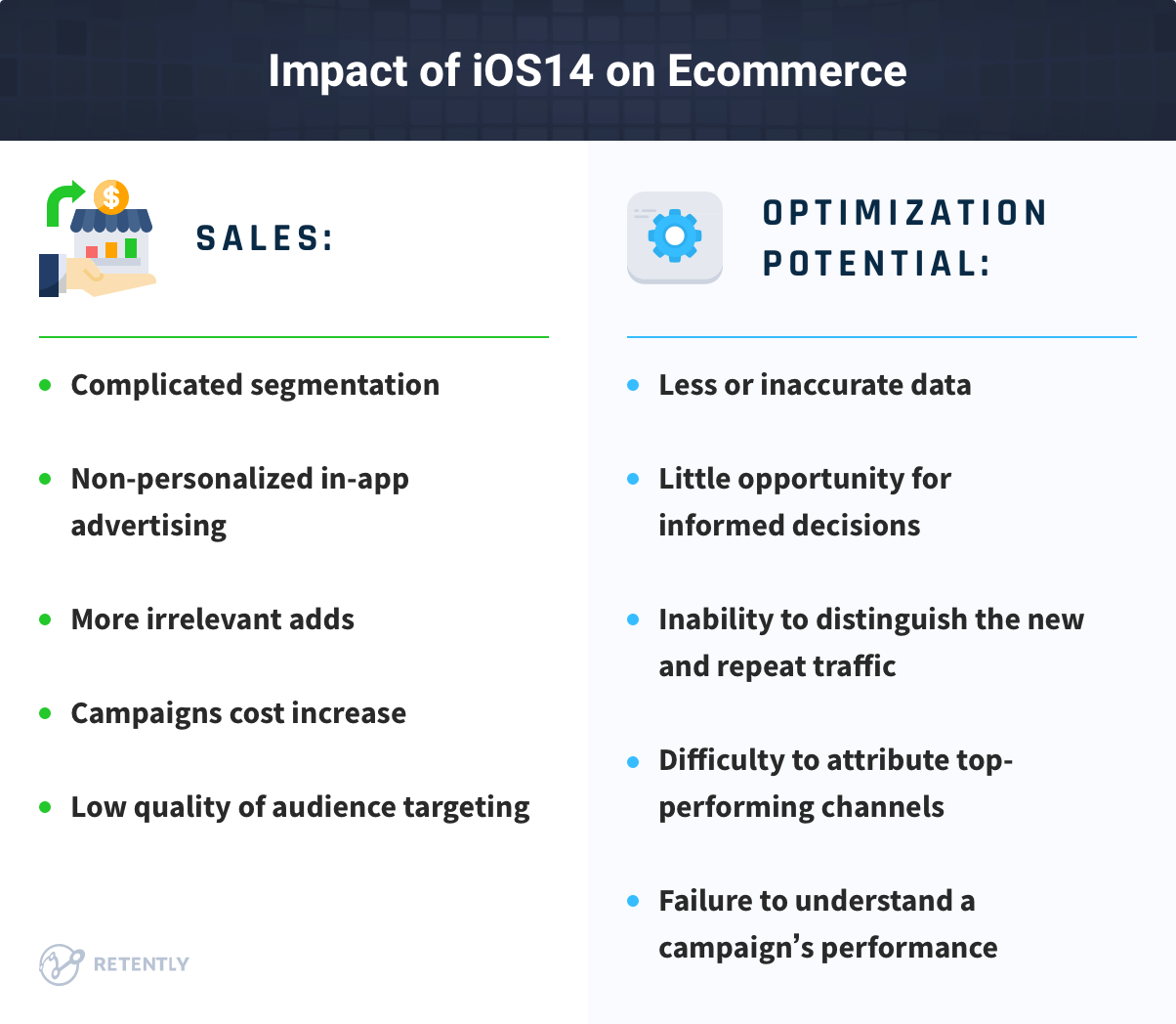

But what did it mean for ecommerce? No less than:

- Decrease in sales: With limited ability to run personalized ads and to understand, reach and engage the target audience, growth became challenging. Without location or demographic targeting data, segmentation became more complicated and in-app advertising less personalized, with users being served more, but less relevant, ads. This not only drove up the cost of ad campaigns but also seems to annoy 90% of consumers. The lower quality of audience targeting inevitably dragged sales down.

- Limited optimization potential: It became more challenging to understand campaigns’ performance, distinguish between new and repeat traffic, report on conversions and find out where top-performing users are coming from. With less or inaccurate data, there is little to no opportunity to make informed decisions.

How can brands counter the changes?

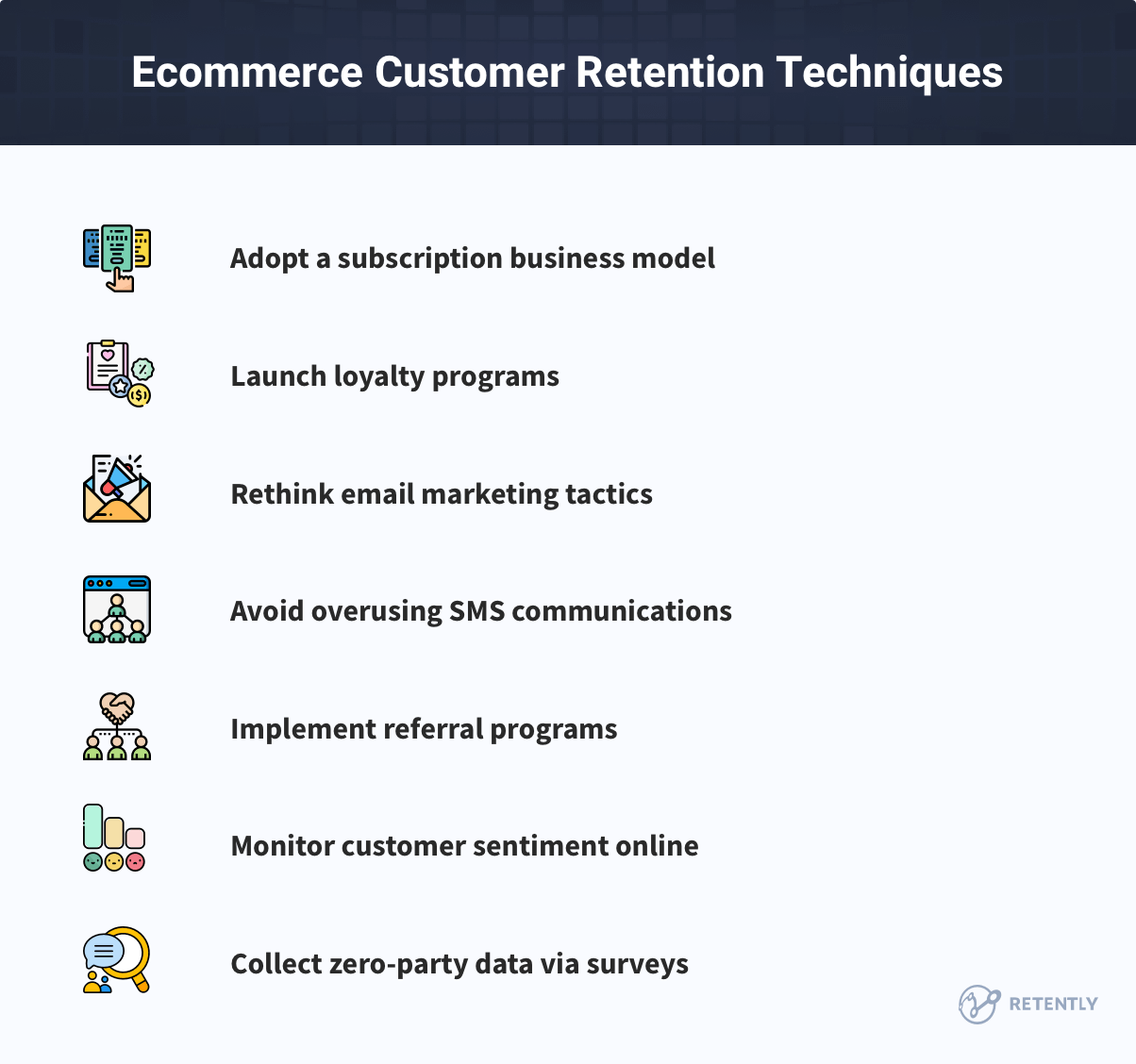

With customer acquisition cost (CAC) going up and competition increasing, ecommerce marketers were forced to put customer retention strategies up front. Although ad companies started to look for workarounds to mitigate the consequences, businesses had to resort to new techniques that would make their customers come back and repurchase. Among the most popular solutions are:

1. Adopt a subscription business model

Adopting a subscription business model can be a great way for ecommerce companies to generate recurring revenue and create a steady stream of income from customers who have committed to regularly buying. It involves charging a recurring fee, typically on a monthly or annual basis.

Although the subscription model is not yet widespread in ecommerce & retail, data shows that it has grown by more than 100% a year over the past five years. Apart from providing a predictable source of income that can help smooth out fluctuations in sales, it helps you retain customers for longer and buys you time to figure out ways to nurture their loyalty long-term.

These buyers are more likely to be engaged with a brand and can be offered unique perks to encourage retention. Additionally, subscription models can reduce customer acquisition costs, as retaining recurring customers is often less expensive than acquiring new ones.

To adopt a subscription business model, ecommerce companies can begin by identifying the products or services that are well-suited for a recurring purchase. This could include items like monthly beauty boxes, food delivery, clothing subscriptions, or even access to exclusive content and features.

Still, there is one aspect to keep in mind when adopting a subscription model: offering flexible order management options. According to “The State of Subscription Commerce” report, in 2022, 35% of subscribers made changes to their orders; among those, 39% skipped an order, while 44% swapped products.

Letting subscribers reduce spending through flexible subscription management is likely to increase retention and LTV: in a study by McKinsey, 74% of respondents adjusted their shopping habits to save money. Allowing subscribers to control their spending builds trust, strengthens their loyalty to your brand, and creates long-term value for your ecommerce business.
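The report figures quoted above are nested: the 39% and 44% apply only to the 35% of subscribers who changed an order. As a rough worked example, the sketch below converts them into shares of the whole subscriber base. The variable and function names are illustrative only, and the calculation assumes the percentages can simply be multiplied as quoted.

```python
# Shares quoted from the report cited above (2022 figures).
changed_order = 0.35         # share of all subscribers who changed an order
skipped_given_change = 0.39  # share of those changers who skipped an order
swapped_given_change = 0.44  # share of those changers who swapped products


def share_of_all(base_share: float, conditional_share: float) -> float:
    """Convert a share 'among changers' into a share of all subscribers."""
    return base_share * conditional_share


skipped_overall = share_of_all(changed_order, skipped_given_change)
swapped_overall = share_of_all(changed_order, swapped_given_change)

print(f"Skipped an order: {skipped_overall:.2%} of all subscribers")
print(f"Swapped products: {swapped_overall:.2%} of all subscribers")
```

In other words, roughly 14% of all subscribers skipped an order and roughly 15% swapped products. The two groups may overlap, so the shares should not be added together.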

2. Launch loyalty programs

For 78% of US consumers, a good loyalty program encourages them to buy more, while 72% are more likely to recommend brands to others. Implementing such a program could include easily redeemable rewards at check-out, increasing discounts for additional purchases, limited-time discount codes, early access to new products, etc. Used at the right time, these can increase repeat purchases and boost retention by giving ecommerce customers a reason to stick around.

One of the key benefits of such a program is that it helps build a loyal customer base. By rewarding clients for their repeat purchases, brands motivate and retain them for longer. It can also help attract new buyers, as potential customers may be more likely to shop with a brand that offers a loyalty program.

Another benefit of loyalty programs is the valuable customer data they can surface. By tracking customer purchases and rewards, brands can gain insights into customer behavior and preferences. This data can then be used to create more personalized marketing campaigns and improve the customer experience.

3. Bring more personalization into your email marketing tactics

Now seems to be the best time to build your email subscriber list and adopt a customer-centric approach that provides value. Instead of overwhelming customers with offers in the hope that they will stick around, retarget them with personalized email campaigns based on behavior and needs.

With consent-based marketing on the rise, brands can no longer afford to send loads of emails without trying to understand what would be more relevant for buyers throughout their journey. Since the new privacy restrictions, the ecommerce industry has been forced to think more about marketing in terms of the customer journey, so understanding the key stages of the buyer’s lifecycle is a must for retention.

Personalization is an efficient tool for building stronger relationships with customers and driving repeat business. Effective ways to bring more customization into email marketing include segmenting the email list to create targeted campaigns that speak directly to the specific needs and interests of your audience, and using personalization variables and dynamic content.
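To make segmentation and personalization variables more concrete, here is a minimal sketch that assigns each subscriber to a lifecycle segment and fills a matching template. Everything in it is an assumption made for illustration: the `Customer` fields, the segment names, the 90-day lapse threshold and the message copy are hypothetical, not taken from any particular email platform.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Customer:
    email: str
    first_name: str
    order_count: int
    last_order: Optional[date]  # None if the customer has never ordered


def lifecycle_segment(c: Customer, today: date, lapsed_after_days: int = 90) -> str:
    """Assign a customer to one lifecycle segment (thresholds are assumptions)."""
    if c.order_count == 0 or c.last_order is None:
        return "prospect"
    if (today - c.last_order).days > lapsed_after_days:
        return "lapsed"
    if c.order_count == 1:
        return "first_time"
    return "repeat"


# One template per segment; {first_name} is the personalization variable.
TEMPLATES = {
    "prospect": "Hi {first_name}, here is a quick guide to choosing your first order.",
    "first_time": "Hi {first_name}, thanks for your first order! Here is how to get the most out of it.",
    "repeat": "Hi {first_name}, as a returning customer you get early access to new products.",
    "lapsed": "Hi {first_name}, we have missed you. Here is what is new since your last visit.",
}


def personalized_message(c: Customer, today: date) -> str:
    """Pick the template for the customer's segment and fill in their name."""
    return TEMPLATES[lifecycle_segment(c, today)].format(first_name=c.first_name)


if __name__ == "__main__":
    ana = Customer("ana@example.com", "Ana", order_count=3, last_order=date(2024, 1, 10))
    print(personalized_message(ana, today=date(2024, 2, 1)))
```

In practice the segments and thresholds would come from your own purchase data and lifecycle stages; the point is only that each segment receives content written for it rather than one generic blast.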

4. Align SMS communication with customer behavior

With an open rate as high as 98%, SMS marketing is effectively used to encourage repeat sales, share updates on shipment and delivery or let customers take quick actions. However, since SMS is the most intimate form of communication, typically reserved for friends and family, the shared info has to be non-intrusive, conversational, and highly relevant to the customer in order to serve its purpose. SMS should not be just an extension of email but rather deliver value that cannot be found elsewhere.

So, the idea is not to obsess over revenue but to keep customer lifetime value in mind. Brands successfully use SMS chatbots that can handle these communications without compromising the brand’s standards, sharing the right message at the right time with the targeted subscriber cohort based on prebuilt workflows. These include post-purchase flows and welcome or abandoned-cart series, all meant to make the customer feel cared for.

It’s also important to give customers the option to opt out of SMS communications and promptly honor requests to unsubscribe. This will help to build trust and maintain a positive relationship with customers.

5. Implement referral programs

Implementing referral programs is a cost-effective way to acquire new customers, as the existing audience is doing the heavy lifting of bringing in new business. Incentivize your most active customers to share their experiences via reviews or general word-of-mouth. Being enthusiastic about your products, they would gladly help you grow your business by increasing the audience. Market leaders have excelled at this, so you can check some advocacy campaign examples for inspiration.

This type of word-of-mouth marketing can be extremely powerful, as people are more likely to trust recommendations from friends and family than they are to trust traditional advertising.

Moreover, by rewarding customers for their referrals, brands can build a sense of community and loyalty among their customers. This can lead to increased customer satisfaction and retention, which can ultimately drive more revenue.

6. Monitor customer sentiment online

Monitoring customer sentiment online is an important retention strategy for ecommerce companies looking to understand their customers and ultimately impact purchase intent. By tracking customer sentiment, brands can identify areas where they are excelling and areas where they need to improve.

You might need someone on the team responsible for following anything from a tweet to an Instagram post, a review, or comments your target audience made about your brand or the industry as a whole. When it comes to retail & ecommerce, reviews turn out to be extremely impactful on trust and purchase intent, with 88% of buyers treating them like personal recommendations. Hence staying on top of your brand’s online reputation is not a choice but a necessity.

One way to monitor customer sentiment online is by using social media listening tools. These tools allow businesses to track mentions of their brand and products across various social media platforms, and gain insights into the sentiment of those mentions. For example, if a customer posts a positive review of a product, that can be an indicator that the customer is likely to repurchase in the future.

Another method is tracking customer reviews on ecommerce platforms such as Amazon, or similar review sites. For example, if customers are consistently leaving negative reviews about a particular product, it might be a sign that the quality of that product needs to be improved.
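As a rough illustration of the review-tracking idea above, the sketch below flags products whose reviews are consistently negative. The input format (pairs of product ID and star rating), the function name and every threshold are assumptions chosen for the example; a real setup would pull reviews from your store or a listening tool and tune these values.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def flag_products(
    reviews: Iterable[Tuple[str, int]],
    negative_share_threshold: float = 0.5,  # assumed cut-off for "consistently negative"
    min_reviews: int = 3,                   # ignore products with too few reviews
    negative_at_or_below: int = 2,          # 1-2 stars count as negative (assumption)
) -> List[str]:
    """Return product IDs whose share of negative reviews meets the threshold."""
    totals = defaultdict(int)
    negatives = defaultdict(int)
    for product_id, stars in reviews:
        totals[product_id] += 1
        if stars <= negative_at_or_below:
            negatives[product_id] += 1
    return sorted(
        product_id
        for product_id, count in totals.items()
        if count >= min_reviews
        and negatives[product_id] / count >= negative_share_threshold
    )


if __name__ == "__main__":
    sample = [
        ("mug", 1), ("mug", 2), ("mug", 5),
        ("tee", 5), ("tee", 4), ("tee", 1),
        ("cap", 1),
    ]
    print(flag_products(sample))
```

The minimum review count keeps a single angry review from flagging a product, which matches the emphasis on reviews being consistently negative.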

7. Collect zero-party data

Data ambiguity introduced a lot of guesswork, forcing online businesses to look for other tactics to efficiently approach targeting and performance, shifting the focus from third-party data to the one who’s paying their bills – the customer.

Rather than simply chasing profits, brands must now go above and beyond to deliver value-added customer experiences and create personal connections. By earning loyalty, they can boost repeat business. The way to do this is to go back to the source and get to know customers directly, collecting customer-provided data via quizzes and customer surveys. While we advocate for a combined strategy, we highly recommend NPS surveys, as they are broader in scope and can look into both retention and acquisition potential.

Collecting zero-party data is a valuable strategy for ecommerce to elevate the CX and build trust. By understanding customer preferences, businesses can create personalized and relevant experiences for their customers, gain a deeper understanding of their audience, and improve their overall marketing strategy. Additionally, brands can build stronger, more sustainable relationships with their customers in the long run.

Given the number of surveys you might need to send and the volume of feedback you’ll receive, specialized NPS survey software that lets you automate many tasks and integrate with various systems is a solution worth considering. It will help you run multiple surveys, each focused on relevant aspects of the customer experience.
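For readers new to NPS, the score itself is simple to compute. Under the standard definition, respondents rate how likely they are to recommend you on a 0-10 scale; those answering 9 or 10 are promoters, those answering 0 to 6 are detractors, and the score is the percentage of promoters minus the percentage of detractors. The sketch below is a minimal illustration of that calculation with a hypothetical function name, not a description of how any particular survey tool implements it.

```python
from typing import Sequence


def net_promoter_score(scores: Sequence[int]) -> float:
    """Compute NPS (from -100 to 100) from 0-10 'likelihood to recommend' answers."""
    if not scores:
        raise ValueError("at least one survey response is required")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # answers of 9 or 10
    detractors = sum(1 for s in scores if s <= 6)  # answers of 0 to 6
    # Passives (7 or 8) count toward the total but toward neither group.
    return 100.0 * (promoters - detractors) / len(scores)


if __name__ == "__main__":
    responses = [10, 10, 9, 8, 7, 6, 3]
    print(f"NPS: {net_promoter_score(responses):.1f}")
```

Tracking this number per survey, for example one after purchase and one after a support interaction, shows which parts of the customer experience are building loyalty and which are eroding it.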

Is there life post-iOS 14?

No doubt, Apple’s iOS changes have stirred some panic among sellers. But with change comes opportunity, as third-party data is traded for a more reliable source – customer-provided data.

To secure retention and growth, your ecommerce business needs to look beyond the transaction and keep an ongoing dialogue with the audience. By focusing on zero-party data, building genuine relationships with customers, and experimenting with retention techniques, brands can adapt and continue to reach their target audience in a more privacy-aware ecosystem.

By using CX survey software like Retently, you can collect more relevant data and leverage it to engage customers with frictionless interactions and foster brand loyalty.

Alex Bitca

Greg Raileanu
